Installing Ceph Store2

URLs

Dashboard: https://ov002.pkgdata.net:8443/

Grafana: https://ov001.pkgdata.net:3000/

Infrastructure

3 × OVH Advance-Stor servers

CPU: AMD EPYC 4344P, 8c/16t, 3.8/5.3 GHz (base/boost)

RAM: 128 GB DDR5 5200 MHz

Disks: 2 × 960 GB SATA SSD + 4 × 22 TB SAS HDD

Installing the servers

OS

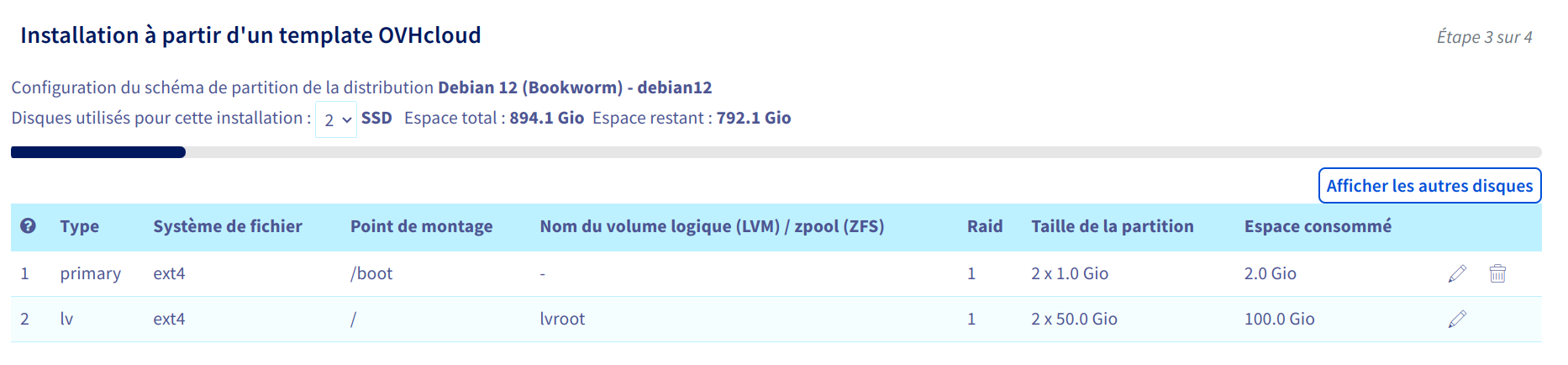

Partitioning:

Ansible

For debugging, append: -vvv -e 'debops__no_log=false'

ansible-playbook -i inventory/000_hosts playbooks/debops_bootstrap.yml --vault-id @prompt --flush-cache --user debian --limit ceph_store2

ansible-playbook -i inventory/000_hosts playbooks/debops_site.yml --vault-id @prompt --flush-cache --limit ceph_store2
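A minimal sketch that runs both playbooks in sequence and adds the debug flags only when asked. The function name `debops_store2` and the `DEBUG` / `DRY_RUN` switches are hypothetical helpers, not part of the inventory; `DRY_RUN=1` only prints the commands.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical wrapper: bootstrap then site for ceph_store2.
# DEBUG=1 appends the debug flags from the note above to both playbooks.
debops_store2() {
  if [ "${DEBUG:-0}" = 1 ]; then
    set -- -vvv -e debops__no_log=false
  else
    set --
  fi
  # Stop if the bootstrap playbook fails instead of running site anyway.
  run ansible-playbook -i inventory/000_hosts playbooks/debops_bootstrap.yml \
      --vault-id @prompt --flush-cache --user debian --limit ceph_store2 "$@" &&
  run ansible-playbook -i inventory/000_hosts playbooks/debops_site.yml \
      --vault-id @prompt --flush-cache --limit ceph_store2 "$@"
}
```

Usage: `DEBUG=1 debops_store2`, then reboot as below.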

reboot

SAS Disk Performance Tuning

Update the firmware

openSeaChest_Firmware -d all --modelMatch ST22000NM004E --fwdlInfo | grep Revision

openSeaChest_Firmware -d all --modelMatch ST22000NM004E --downloadFW LongsPeakBPExosX22SAS-SED-512E-ET02.LOD

openSeaChest_Firmware -d all --modelMatch ST22000NM004E --downloadFW LongsPeakBPExosX22SAS-SED-512E-ET03.LOD
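The same steps as a small sketch, assuming the two `.LOD` images are in the current directory and must be applied in the order listed (ET02, then ET03). It stops at the first failed download and prints the firmware info at the end. `update_firmware` and `DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
MODEL=ST22000NM004E

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

update_firmware() {
  # Assumed order: ET02 before ET03.
  for fw in LongsPeakBPExosX22SAS-SED-512E-ET02.LOD \
            LongsPeakBPExosX22SAS-SED-512E-ET03.LOD; do
    # Do not flash the next image if this one failed.
    run openSeaChest_Firmware -d all --modelMatch "$MODEL" --downloadFW "$fw" || return 1
  done
  # Show the resulting firmware info (pipe through grep Revision as above).
  run openSeaChest_Firmware -d all --modelMatch "$MODEL" --fwdlInfo
}
```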

Disable the write cache (write throughput goes from 130 MB/s to 200 MB/s)

openSeaChest_Configure -d all --modelMatch ST22000NM004E --writeCache info

openSeaChest_Configure -d all --modelMatch ST22000NM004E --writeCache disable

for i in $(seq 0 3); do echo "write through" > /sys/class/scsi_disk/0\:0\:$i\:0/cache_type; done
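The loop above assumes the four HDDs are SCSI addresses 0:0:0:0 to 0:0:3:0. A sketch that instead selects disks by model string read from sysfs, so the SATA SSDs are never touched. `set_write_through` and the `SYSFS_ROOT` override (used only for testing) are hypothetical. Like the original loop, this sysfs setting is not persistent across reboots.

```sh
#!/bin/sh
# Set "write through" on every SCSI disk whose model starts with $1.
# SYSFS_ROOT is a hypothetical test override; leave it empty on a real host.
set_write_through() {
  model="$1"
  root="${SYSFS_ROOT:-}"
  for d in "$root"/sys/class/scsi_disk/*; do
    [ -e "$d/cache_type" ] || continue
    # SCSI model strings are space-padded, so match the model as a prefix.
    case "$(cat "$d/device/model" 2>/dev/null)" in
      "$model"*)
        echo "write through" > "$d/cache_type"
        echo "$(basename "$d"): $(cat "$d/cache_type")"
        ;;
    esac
  done
}
```

Usage: `set_write_through ST22000NM004E` prints one line per HDD with its new cache mode.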

Ceph

CEPH_RELEASE=18.2.2 ; curl --silent --remote-name --location https://download.ceph.com/rpm-${CEPH_RELEASE}/el9/noarch/cephadm

chmod +x cephadm

sudo ./cephadm add-repo --release reef

Make the repository key readable by apt, then run add-repo again:

sudo chmod 644 /etc/apt/trusted.gpg.d/ceph.release.gpg

sudo ./cephadm add-repo --release reef

sudo ./cephadm install cephadm ceph ceph-volume ceph-fuse

rm cephadm
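`add-repo --release reef` points apt at the reef repository, so the installed packages may be a later 18.2.x point release than the `CEPH_RELEASE` used to download the cephadm binary. A sketch that only checks the major version, assuming `ceph --version` prints a line of the form `ceph version 18.2.x (<sha1>) reef (stable)`. `check_ceph_major` is a hypothetical name.

```sh
#!/bin/sh
# Succeed if the installed ceph CLI belongs to the expected major release.
check_ceph_major() {
  expected="$1"
  # Third field of "ceph version 18.2.x (...) reef (stable)".
  got=$(ceph --version | awk '{print $3}')
  if [ "${got%%.*}" = "$expected" ]; then
    echo "ok: ceph $got"
  else
    echo "unexpected ceph version: $got (wanted $expected.x)" >&2
    return 1
  fi
}
```

Usage: `check_ceph_major 18` (reef).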

Configure Ceph

cephadm bootstrap --mon-id ov001.cephstore2 --mon-ip 192.168.1.1 --cluster-network 192.168.1.0/24

Copy the contents of /etc/ceph/ceph.pub into ~/work/infras/inventory/group_vars/ceph_store2/debops_users.yml, then run:

ansible-playbook -i inventory/000_hosts playbooks/debops_site.yml --vault-id @prompt --flush-cache --limit ceph_store2 --tags role::root_account
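For reference, the upstream cephadm documentation distributes the cluster key with `ssh-copy-id` instead. A sketch of that alternative, assuming root SSH access (password or existing key) to the other hosts. `copy_ceph_key` and `DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Authorize the cephadm public key for root on each host given as argument.
copy_ceph_key() {
  for host in "$@"; do
    run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$host" || return 1
  done
}
```

Usage: `copy_ceph_key ov002 ov003`, then add the hosts as below.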

ceph orch host add ov002 192.168.1.2 --labels _admin

ceph orch host add ov003 192.168.1.3 --labels _admin

On ov001:

for i in $(seq 0 3); do lvcreate -L 50GB -n db-$i vg; done

ceph orch daemon add osd ov001:data_devices=/dev/sda,db_devices=/dev/vg/db-0,osds_per_device=1

ceph orch daemon add osd ov001:data_devices=/dev/sdb,db_devices=/dev/vg/db-1,osds_per_device=1

ceph orch daemon add osd ov001:data_devices=/dev/sdc,db_devices=/dev/vg/db-2,osds_per_device=1

ceph orch daemon add osd ov001:data_devices=/dev/sdd,db_devices=/dev/vg/db-3,osds_per_device=1

On ov002:

for i in $(seq 0 3); do lvcreate -L 50GB -n db-$i vg; done

ceph orch daemon add osd ov002:data_devices=/dev/sda,db_devices=/dev/vg/db-0,osds_per_device=1

ceph orch daemon add osd ov002:data_devices=/dev/sdb,db_devices=/dev/vg/db-1,osds_per_device=1

ceph orch daemon add osd ov002:data_devices=/dev/sdc,db_devices=/dev/vg/db-2,osds_per_device=1

ceph orch daemon add osd ov002:data_devices=/dev/sdd,db_devices=/dev/vg/db-3,osds_per_device=1

On ov003:

for i in $(seq 0 3); do lvcreate -L 50GB -n db-$i vg; done

ceph orch daemon add osd ov003:data_devices=/dev/sda,db_devices=/dev/vg/db-0,osds_per_device=1

ceph orch daemon add osd ov003:data_devices=/dev/sdb,db_devices=/dev/vg/db-1,osds_per_device=1

ceph orch daemon add osd ov003:data_devices=/dev/sdc,db_devices=/dev/vg/db-2,osds_per_device=1

ceph orch daemon add osd ov003:data_devices=/dev/sdd,db_devices=/dev/vg/db-3,osds_per_device=1
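The twelve OSD commands above follow one pattern: on each host, /dev/sda to /dev/sdd get block.db volumes db-0 to db-3 in the volume group `vg`. A sketch of that pattern, assuming identical device names on all three hosts. `create_db_lvs` must still run locally on each host; `add_osds` can run once from an admin node. Both function names and `DRY_RUN` are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Run on each OSD host: one 50 GB block.db LV per HDD.
create_db_lvs() {
  for i in 0 1 2 3; do
    run lvcreate -L 50GB -n "db-$i" vg || return 1
  done
}

# Run once from an admin node, after create_db_lvs on every host.
add_osds() {
  for host in "$@"; do
    i=0
    for dev in sda sdb sdc sdd; do
      run ceph orch daemon add osd \
        "$host:data_devices=/dev/$dev,db_devices=/dev/vg/db-$i,osds_per_device=1" || return 1
      i=$((i + 1))
    done
  done
}
```

Usage: `add_osds ov001 ov002 ov003`. Afterwards, `ceph osd tree` should list four OSDs under each host.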

ceph fs volume create axonaut

ceph orch apply nfs axonaut

ceph nfs export create cephfs --cluster-id axonaut --pseudo-path /axonaut --fsname axonaut
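A sketch for checking the export and mounting it from a client. The NFS host is an assumption: `ceph orch ps --daemon-type nfs` shows where cephadm placed the daemon. The mount point `/mnt/axonaut` and the explicit NFSv4.1 option are also assumptions; `mount_axonaut` and `DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# mount_axonaut <nfs_host> [mount_point]
mount_axonaut() {
  nfs_host="$1"
  mnt="${2:-/mnt/axonaut}"
  [ -n "$nfs_host" ] || { echo "usage: mount_axonaut <nfs_host> [mount_point]" >&2; return 2; }
  # Show the export definition created above.
  run ceph nfs export info axonaut /axonaut || return 1
  run mkdir -p "$mnt" || return 1
  # Mount the pseudo-path over NFSv4.1.
  run mount -t nfs -o nfsvers=4.1,proto=tcp "$nfs_host:/axonaut" "$mnt"
}
```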

Enable the snap_schedule module first, then activate the schedule for a given path:

ceph mgr module enable snap_schedule

ceph fs snap-schedule activate <path>
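`activate` applies to a schedule that already exists. A sketch that creates one: hourly snapshots on the root of the file system, keeping 24 hourly snapshots. It assumes axonaut is the only CephFS file system; the path, interval and retention are example values, and `schedule_snapshots` and `DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# schedule_snapshots [path], default path is the file system root.
schedule_snapshots() {
  path="${1:-/}"
  # One snapshot per hour.
  run ceph fs snap-schedule add "$path" 1h || return 1
  # Keep at least 24 hourly snapshots.
  run ceph fs snap-schedule retention add "$path" h 24 || return 1
  run ceph fs snap-schedule status "$path"
}
```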

Object Gateway Service (RGW)

Check for existing realms first:

radosgw-admin realm list

radosgw-admin realm create --rgw-realm=eu-west --default

radosgw-admin realm default --rgw-realm=eu-west

radosgw-admin zonegroup create --rgw-zonegroup=ovh --rgw-realm=eu-west --master --default

radosgw-admin zonegroup default --rgw-zonegroup=ovh

radosgw-admin zone create --rgw-zone=store2 --rgw-zonegroup=ovh --master --default

radosgw-admin zone default --rgw-zone=store2

radosgw-admin period update --commit

ceph orch apply rgw store2 --realm=eu-west --zonegroup=ovh --zone=store2
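The realm, zonegroup and zone sequence above, as a parameterized sketch that could be replayed on another cluster. `bootstrap_rgw`, `REALM`, `ZONEGROUP`, `ZONE` and `DRY_RUN` are hypothetical names; the defaults match this cluster, and the RGW service id reuses the zone name as in the command above.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

bootstrap_rgw() {
  realm="${REALM:-eu-west}"
  zonegroup="${ZONEGROUP:-ovh}"
  zone="${ZONE:-store2}"
  # Each step stops the sequence if it fails.
  run radosgw-admin realm create --rgw-realm="$realm" --default &&
  run radosgw-admin realm default --rgw-realm="$realm" &&
  run radosgw-admin zonegroup create --rgw-zonegroup="$zonegroup" --rgw-realm="$realm" --master --default &&
  run radosgw-admin zonegroup default --rgw-zonegroup="$zonegroup" &&
  run radosgw-admin zone create --rgw-zone="$zone" --rgw-zonegroup="$zonegroup" --master --default &&
  run radosgw-admin zone default --rgw-zone="$zone" &&
  # Commit the new period before deploying the gateways.
  run radosgw-admin period update --commit &&
  run ceph orch apply rgw "$zone" --realm="$realm" --zonegroup="$zonegroup" --zone="$zone"
}
```

Usage on this cluster: `bootstrap_rgw`, with no variables set.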

ceph dashboard set-rgw-credentials

Configure HTTPS:

ceph config-key set rgw/cert/rgw.cephbackup -i /etc/pki/realms/pkgdata.backup/private/key_chain.pem

then verify:

ceph orch ls --service-type rgw --export

ceph config-key get rgw/cert/rgw.cephbackup

Disable certificate verification on the dashboard's connection to RGW:

ceph dashboard set-rgw-api-ssl-verify False